ESI Exclusive: Dave Smith, VFX supervisor at Sony Imageworks, talks about the amazing effects of THE AMAZING SPIDER-MAN - Part 2

Cinema article, Thursday, July 19, 2012

Interview by Pascal Pinteau

How did you work with Andrew Garfield to make his digital double move just like him?

The movement of the animated Spider-Man was truly an artistic challenge rather than a technical “solution.” Andrew was deeply connected to (and passionate about) a very specific movement style for Spider-Man. Apparently, he has always been a big fan of the property. The animation team studied Andrew’s movement style by sifting through volumes of reference material of Andrew both on and off the set. Once the animation team demonstrated that they could emulate his performances, Marc Webb wanted to do more and more shots of Spider-Man as a CG character because he thought it was working so well. That movement style is pushed to broader extremes as Spider-Man swings through the city, and at that point it was the stuntmen who served as a great inspiration for the look Marc was after. Above all, the character needed to feel real, as if there were real weight and physics at work.

Marc Webb said that filming actual stuntmen playing Spider-Man and moving on wires for the short scene under the Harlem bridge was a tremendous inspiration for the animation of the shots with the digital Spider-Man. Can you elaborate on that and explain how it changed the approach to animating Spider-Man?

The stuntman’s movement on the wire begins as a fluid, acrobatic swing, punctuated by a brisk movement as he shoots and transfers his weight to the next web-line, and then returns to a fluid swing. Of course, the bridge scene was at a lower altitude. The higher Spider-Man is above the ground, the more fluid the entire motion becomes due to his increased acceleration. For instance, in the Crane Sequence (near the end of the film), Spider-Man’s motion is very fluid and very acrobatic because he has incredible momentum.

During the big action scenes, how much “digital Spider-Man” is there compared to “practical Spider-Man”? Would it be a 50/50 split?

Marc envisioned a naturalistic and organic feel for Spider-Man, but he also wanted Spider-Man’s superhuman powers to fully satisfy the audience’s expectations, and this was best accomplished with a digital Spider-Man. Even so, it was important to maintain the organic quality of the character’s look and movement by taking cues from the photographed elements of Andrew Garfield or a stuntman. In many sequences we intercut shot to shot between CG Spider-Man and live-action Spider-Man. In cases where an unmasked Andrew Garfield was visible, we would deploy the appropriate digital double.

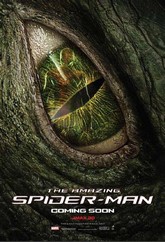

Can you describe the design and animation process for the Lizard?

To bring the Lizard to life as a visual effect, we began by creating a digital version of the Legacy-designed maquette. From there we looked to nature to ground the creature in reality. We photographed and studied real lizards and other animals and infused characteristics from these creatures into the look and movement of his skin and facial details. The Komodo dragon was heavily referenced because of its size, while fine details, like scale patterning and skin translucency, were borrowed from smaller lizards such as iguanas. The Lizard’s physique incorporates large muscle groups encased in areas of loose skin, so skin simulation and muscle dynamics were required on every Lizard shot. The Komodo dragons were the inspiration for how the muscles moved beneath loose, scaled skin. Rhys Ifans was our inspiration for the Lizard’s character animation, and the video reference of him shot on set became the source reference for the animation teams. This was especially true of the close-ups, where very specific and subtle facial performances had to be executed with great attention to detail, and it was also the case for the broader performances wherever possible. But once the performances become truly extreme (in a violent fight scene, for example), the work passes into the hands of the animators for an artistic interpretation of how the actor would execute those moves. Even in these instances, the animators draw on the knowledge of the character they acquired from those subtle moments, always with an eye toward keeping the movement grounded and real, but also real in the sense of “in character,” based on what they have learned about him.

Did you create transformation effects on Rhys Ifans to show his character’s gradual metamorphosis? How much did you blend visual effects with makeup effects?

There was always some level of makeup effects on Rhys when he was transforming, and some prosthetics were used in his initial transformation. Imageworks concentrated on the Lizard’s transformation back into Dr. Connors. As the Lizard reverts to human form, his skin dries and withers, revealing layers of disintegrating skin. These effects were accomplished by using Houdini to procedurally tear the outer transforming mesh and embed attributes into it. These attributes were then used by Maya’s nCloth solver to give the tearing skin a sense of realistic and varied motion, taking into account the weight, flexibility and age of the surfaces as they deteriorate. Separate meshes were dynamically created inside Houdini and merged with the simulated layers to give true thickness to each piece. As the skin opens, we reveal various layers of subcutaneous tissue, with each layer of tendon simulated one after another to ensure stability and prevent interpenetrations. Supporting particulate effects were derived from the tear points to create a sense of shedding dry epidermis in the form of a fine mist and flakes of skin. The same embedded attributes were also used to drive the shading of the skin as it decomposes. On a creative level, we pulled from several sources of inspiration to arrive at the final look: shed snakeskin, embryonic soft tissue and plastinated (Body Worlds) shark husks, to name a few. A mixture of Arnold’s true ray-tracing and point-cloud subsurface rendering methods was instrumental in capturing a sense of depth and translucency as the skin loses its moisture. To put all the pieces together, we relied heavily on Nuke’s 3D compositing tools to blend all of our rendered layers in the digital composite. Being able to adjust the timing of the transitions between different rendered looks was key to a quick turnaround between iterations.
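The key idea in the answer above is that a single set of per-point attributes, embedded once during the procedural tear, is reused downstream: by the cloth solver (weight, flexibility, age), by the particle effects (tear points) and by the shaders (drying skin). The sketch below illustrates only that data flow in plain Python. It is not Imageworks’ pipeline and does not use any Houdini, Maya or Arnold API; the attribute names (`tear`, `age`), the tear-front model and every constant are hypothetical assumptions chosen for illustration.

```python
"""Hypothetical sketch: one set of embedded per-point attributes drives
simulation, particle emission and shading. Names, formulas and constants
are illustrative assumptions, not a production pipeline or a DCC API."""
import math
from dataclasses import dataclass


@dataclass
class SkinPoint:
    pos: tuple          # (x, y, z) position on the transforming mesh
    tear: float = 0.0   # hypothetical attribute: 1.0 = torn, 0.0 = intact
    age: float = 0.0    # hypothetical attribute: 0.0 = fresh, 1.0 = fully dried


def embed_attributes(points, origin, speed, ramp, time):
    """Spread a tear front outward from `origin` and ramp drying behind it."""
    front = speed * time  # radius reached by the tear front at this time
    for p in points:
        d = math.dist(p.pos, origin)
        p.tear = 1.0 if d <= front else 0.0
        # Points farther behind the front have been exposed longer, so they
        # are drier; the value is clamped to [0, 1].
        p.age = min(1.0, max(0.0, (front - d) / ramp))


def sim_params(p):
    """Map attributes to hypothetical cloth inputs: drier skin is lighter and stiffer."""
    return {"mass": 1.0 - 0.5 * p.age, "stiffness": 0.2 + 0.8 * p.age}


def particle_emitters(points):
    """Torn points become emission sources for mist and skin flakes."""
    return [p.pos for p in points if p.tear > 0.0]


def shading_params(p):
    """Map the same attributes to hypothetical shader inputs."""
    moisture = 1.0 - p.age
    return {"moisture": moisture, "translucency": 0.6 * moisture}


if __name__ == "__main__":
    pts = [SkinPoint((0.0, 0.0, 0.0)), SkinPoint((1.0, 0.0, 0.0)), SkinPoint((3.0, 0.0, 0.0))]
    embed_attributes(pts, (0.0, 0.0, 0.0), speed=1.0, ramp=2.0, time=2.0)
    for p in pts:
        print(p.pos, sim_params(p), shading_params(p))
    print("emitters:", particle_emitters(pts))
```

The design point the sketch tries to capture is that the attributes are computed once and only read afterwards, so simulation, particles and look development stay in sync when the tear timing changes.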

The next part of this interview will be coming soon on Effets-speciaux.info!